Projects and Work Experience

Professional Experience

Sparkhound, LLC (November 2023 - PRESENT)

I am currently a member of the Sparkhound Web and Mobile team. Sparkhound's workforce is a mix of fully-remote, hybrid, and fully-onsite employees, most of whom are based near one of its offices across the United States. The team works directly with clients, as well as cross-departmentally with other Sparkhound teams such as Data & Analytics, UX/UI, and Cloud Services.

The team focuses on delivering custom web and mobile applications that meet client-specific business needs, using modern technologies, cloud infrastructure, and software development best practices.

Within this team, I have been primarily assigned to client-facing applications built with C#/.NET and hosted on Microsoft Azure. These applications include both web portals and mobile solutions that support internal business operations, workflow management, and data tracking. They integrate with cloud-based storage, databases, and other enterprise systems to provide reliable performance, scalability, and security for Sparkhound clients across multiple industries.

Sparkhound applies Agile principles in its development lifecycle. During my time with the team, we have followed a two-week sprint cycle. Each sprint begins with Sprint Planning, where backlog items are reviewed, tasks are assigned, and story points are estimated on a Fibonacci scale (1, 2, 3, 5, 8, 13, etc.). Larger stories are broken down into smaller, manageable tasks. Daily Scrum meetings allow the team to discuss progress, outline goals for the upcoming day, and address blockers in assigned tasks.

Due to client confidentiality, I will only discuss the top-level functionality of the applications.

The applications I have worked on support features including user management, workflow and task tracking, reporting and analytics, mobile notifications, and integration with internal and third-party data sources. Client employees use these applications to streamline processes, monitor business operations, and gain insight into operational and analytical data.

The team also works closely with Sparkhound’s cloud architects and data teams to integrate Azure services such as App Services, Functions, Blob Storage, and SQL-based databases, keeping applications highly available, secure, and optimized for performance. Additionally, the team emphasizes maintainable code, automated testing, and CI/CD pipelines in Azure DevOps, which allow for rapid deployment and continuous improvement.

As a fully remote member of the Sparkhound Web and Mobile team, I work as a full-stack developer on both internal Sparkhound projects and contracts for external clients. I collaborate closely with a geographically dispersed team of remote, onsite, and hybrid members, maintaining clear communication and project continuity across multiple time zones.

I actively participate in the Agile development lifecycle across various projects, environments, and clients, including Sprint Planning sessions, daily Scrum meetings, and retrospectives, to ensure accurate task estimation, assignment, and tracking.

One of my major projects involved developing automated workflows to manage Microsoft licenses for contractors. Using Power Automate, the PAX8 REST API, and custom scripts, I implemented processes to scale licenses up or down as workforce sizes changed and to assign licenses to new contractors efficiently.
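The scaling decision itself can be sketched as a pure reconciliation step. This is a hypothetical TypeScript illustration, not the actual workflow: it assumes the current seat count and contractor roster have already been fetched, and it deliberately leaves out the Power Automate and PAX8 REST API calls. `LicenseState`, `planLicenseChanges`, and the one-seat `buffer` are invented names and choices.

```typescript
// Hypothetical snapshot of one license subscription and who currently holds it.
interface LicenseState {
  purchasedSeats: number; // seats currently on the subscription
  assignedTo: Set<string>; // contractor IDs that already hold a license
}

interface LicensePlan {
  newSeatTotal: number; // quantity to request from the provider
  seatDelta: number; // positive = scale up, negative = scale down
  toAssign: string[]; // active contractors who still need a license
  toUnassign: string[]; // license holders who are no longer active
}

// Compare the active roster with current assignments and decide what to change.
// `buffer` (an assumption) keeps a spare seat for quick onboarding.
function planLicenseChanges(
  state: LicenseState,
  activeContractors: string[],
  buffer = 1,
): LicensePlan {
  const active = new Set(activeContractors); // de-duplicate the roster
  const toAssign = Array.from(active).filter((id) => !state.assignedTo.has(id));
  const toUnassign = Array.from(state.assignedTo).filter((id) => !active.has(id));
  // After the changes, each active contractor holds exactly one seat.
  const newSeatTotal = active.size + buffer;
  return {
    newSeatTotal,
    seatDelta: newSeatTotal - state.purchasedSeats,
    toAssign,
    toUnassign,
  };
}
```

Keeping the decision separate from the API calls means the same plan can be logged or reviewed before any seats are actually added or removed.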

I have used Azure DevOps extensively across various client spaces for task tracking, assignment, and progress updates, ensuring full transparency and accountability within the team. This includes breaking larger tasks down into actionable subtasks, estimating completion times, and collaborating with teammates to resolve blockers.

I partnered with another developer on a longer-term contract to modernize a large set of C#/.NET repositories for a client. The project included extensive refactoring to improve code maintainability and updating legacy functionality to align with current best practices.

I designed new database tables and backend relationships to support a new category of invoice tracking within a client system. This included updating layouts, integrating new functionality, and ensuring data integrity across the system.

- C#

- .NET

- Entity Framework

- SQL

- SQL Server Management Studio

- HTML

- CSS

- JavaScript

- TFS

- Git

- Visual Studio

- Azure

- Azure CLI

- Azure DevOps

- Azure App Services

- Azure SQL Databases

- Azure Blob Storage

- Azure Functions

- Azure Key Vault

- Azure Application Insights

- Azure Active Directory

- Azure Monitor

- Azure Logic Apps

- Azure API Management

- Azure Virtual Networks

- and other Azure Tools

- Agile Methodology

- Source Control Techniques and Best Practices

- Legacy Code Updates

- Software Development and Design

- Cooperation within mixed remote, onsite, and hybrid teams, for both internal and external clients

- Direct Communication and Cooperation with Clients/Customers

CAP Index, Inc. (April 2023 - October 2023)

I was a member of the CAP Index Development team. CAP Index is a fully-remote workplace, with most employees based around the Philadelphia metro area. The team works directly with clients, as well as cross-departmentally with CAP Index's internal Analytics and Marketing teams.

CAP Index has created a scoring system for retail locations. This score, known as a CAP Score, indicates the level of crime present at a location and in the surrounding area. The Analytics team works with a variety of crime data sources, including police data and many third-party providers, to build the most comprehensive data pool possible. Clients then use the score to inform many security decisions, such as where to open a new store or how much to invest in security measures at an existing location.

Within this team, I was primarily assigned to the applications CAP Index supports for Walmart and McDonald's, which clients use for internal security tracking, including security surveys and event reports. I also worked on the main CRIMECAST application, which is used both internally and by clients. All of these are .NET applications hosted on Microsoft Azure.

CAP Index applies Agile principles in its development lifecycle. During my time there, the team used a two-week sprint cycle. Every other week, an afternoon was reserved for Sprint Planning, where tasks were taken off the backlog, assigned to the sprint, and given story points, and acceptance criteria for stories and tasks were discussed. Story points followed a Fibonacci scale (1, 2, 3, 5, 8, 13, etc.), and stories estimated at 13 points or higher were almost always broken down into smaller, more manageable stories and tasks. After the team had planned together and the team leads had assigned tasks, each team member used the rest of the Sprint Planning time to estimate hours for their assigned tasks, plan out subtasks, and ask for clarification or any additional support needed. During a sprint, the team held daily Scrum meetings, where each member briefly discussed progress made in the last 24 hours, the plan for the upcoming day, and any problems they were facing in their assigned tasks.

Due to client confidentiality and out of consideration for both CAP Index and its clients, I will discuss only the top-level features of the applications and will not go into detail or reveal any proprietary information from CAP Index or any of its clients.

The Walmart client application is used internally by Walmart employees across many facets of the company. Its features include requests for various types of security measures, reporting of security-related events, creation of reports on security measures and incidents, record keeping for the various forms and reports, and management of the users authorized to use the application.

CAP Index supports two different applications for McDonald's: one used by corporate-owned locations and the other by franchised owner-operator locations. These applications serve a similar function to the Walmart application, with variations specific to the needs of a restaurant rather than a retail location.

The CRIMECAST system and application is CAP Index's flagship product, used by both external clients and internal employees. It allows users to purchase and view CAP Score Reports for a location and to view crime data heat maps built from CAP Scores and CRIMECAST data, and it allows internal CAP Index employees to manage the reports and users of the CRIMECAST system.

The Walmart application was my main assignment. Within this role, I was involved in migrating version control from TFS to Git. This transition included discussions on Git best practices, to which I was able to contribute meaningfully thanks to my previous work and school experience with Git and my effort to stay current on its evolving practices. While completing my assigned tasks, I also cleaned up inline style and script elements, moving them into their own CSS or JavaScript files, with the exception of a few JavaScript functions that directly interact with server-side variables. I was also responsible for many small changes within the application, such as logic that made certain options in a multiple-choice request form mutually exclusive, because they could not exist in the same context together.
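Mutually exclusive options like these can be enforced with a small rule table. The sketch below is a hypothetical TypeScript illustration, not the client's code: the option IDs and the `exclusiveGroups` table are invented, and the real form logic lived in the .NET application. It shows both halves of the rule: clearing conflicting options when one is picked, and rejecting a submitted combination that still conflicts.

```typescript
// Hypothetical option IDs; each inner array lists options that cannot coexist.
const exclusiveGroups: string[][] = [
  ["request-install", "request-removal"],
  ["priority-standard", "priority-urgent"],
];

// Client-side behavior: selecting an option clears the others in its group(s).
function selectOption(selected: Set<string>, picked: string): Set<string> {
  const next = new Set(selected); // copy so the caller's state is not mutated
  for (const group of exclusiveGroups) {
    if (!group.includes(picked)) continue;
    for (const other of group) {
      if (other !== picked) next.delete(other);
    }
  }
  next.add(picked);
  return next;
}

// Server-side check: list every group in which more than one option is selected.
function findConflicts(selected: Set<string>): string[][] {
  return exclusiveGroups
    .map((group) => group.filter((option) => selected.has(option)))
    .filter((hits) => hits.length > 1);
}
```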

One of my projects in the Walmart application was improving the report creation flow. Some reports pull from a large amount of data stored in the backend SQL databases, so some report creation methods take minutes, rather than seconds, to complete. For these cases, it was decided that the user should see a message that the report creation has been queued, and that they will receive an email once the report is available to view. My part of this work was moving the report creation logic into the client's internal Queued Task project, which runs concurrently with the main application. The Queued Task application processes longer-running methods in the background, rather than letting the front-end application appear to "hang" for the user while they run. Moving each report to a Queued Task involved creating or modifying its logic to run as a Queued Task rather than on demand, as well as updating the report generation database records so that report generation referenced the new Queued Task logic rather than the retired on-demand logic.
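The queued-report pattern can be sketched as follows. This is a simplified, in-process TypeScript illustration under stated assumptions: the real Queued Task project was a separate .NET application backed by database records, whereas here an in-memory array stands in for the queue, and `ReportRequest`, `enqueueReport`, `processQueue`, and the `notify` callback (standing in for the email) are hypothetical names.

```typescript
// A queued report request; in the real system this was a database record.
interface ReportRequest {
  id: number;
  reportType: string;
  requestedBy: string; // address to notify when the report is ready
}

type ReportGenerator = (req: ReportRequest) => Promise<string>;
type Notifier = (to: string, message: string) => Promise<void>;

const queue: ReportRequest[] = [];
let nextId = 1;

// Called by the web app: record the request and return immediately, so the
// user sees "your report has been queued" instead of a page that appears to hang.
function enqueueReport(reportType: string, requestedBy: string): number {
  const id = nextId++;
  queue.push({ id, reportType, requestedBy });
  return id;
}

// Called by the background worker: drain the queue one request at a time,
// generate each report, and notify the requester when it is ready (or failed).
async function processQueue(generate: ReportGenerator, notify: Notifier): Promise<number> {
  let processed = 0;
  let req: ReportRequest | undefined;
  while ((req = queue.shift()) !== undefined) {
    try {
      const location = await generate(req);
      await notify(req.requestedBy, `Report ${req.id} is ready: ${location}`);
    } catch {
      await notify(req.requestedBy, `Report ${req.id} failed; please try again.`);
    }
    processed++;
  }
  return processed;
}
```

The key design point is that the request handler only writes to the queue; all slow work happens in the worker, so the front end's response time no longer depends on report size.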

Within the McDonald's applications, my tasks were mostly small maintenance items such as wording updates. My role was to understand the function and design of these applications, but I was not one of the main developers handling most of the assigned work.

Within the CRIMECAST application, my main project was giving internal CAP Index Admin-level users the ability to mark all accounts in the system as "Under Maintenance" so that application updates or data refreshes could be performed. Once the maintenance work was complete, the Admin-level user could bring all accounts out of "Under Maintenance" status and back to normal functioning. I also completed several small maintenance tasks during my time with CAP Index, such as improvements to the UI for purchasing a new report.
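One way to model a bulk maintenance toggle is shown below as a hypothetical TypeScript sketch; the `Account` shape and status names are assumptions. It records each account's prior status when entering maintenance, so that ending maintenance restores accounts that were, for example, already disabled, rather than setting every account back to active. Whether the original feature handled that case is not stated above; it is a design choice of this sketch.

```typescript
type AccountStatus = "Active" | "Disabled" | "UnderMaintenance";

interface Account {
  id: number;
  status: AccountStatus;
  statusBeforeMaintenance?: AccountStatus; // remembered so it can be restored
}

// Admin action: put every account into maintenance, remembering its prior status.
function beginMaintenance(accounts: Account[]): void {
  for (const acct of accounts) {
    if (acct.status === "UnderMaintenance") continue; // already in maintenance
    acct.statusBeforeMaintenance = acct.status;
    acct.status = "UnderMaintenance";
  }
}

// Admin action: return every account to the status it had before maintenance.
function endMaintenance(accounts: Account[]): void {
  for (const acct of accounts) {
    if (acct.status !== "UnderMaintenance") continue;
    acct.status = acct.statusBeforeMaintenance ?? "Active";
    delete acct.statusBeforeMaintenance;
  }
}
```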

- C#

- .NET

- Entity Framework

- SQL

- SQL Server Management Studio

- LINQPad

- HTML

- CSS

- JavaScript

- TFS

- Git

- Visual Studio

- Jira

- Agile Methodology

- Source Control Techniques and Best Practices

- Legacy Code Updates

- Software Development and Design

- Cooperation within a fully-remote team separated by time zones and continents

Pomeroy/IBM (January 2021 - March 2023)

I was a member of the US development team for the GPWS (Global Print Web System) applications. This team is responsible for several groups of applications under the "Print @ IBM" umbrella.

The primary application is GPWS itself, which is used to administer the Print @ IBM printer fleet. Its uses include MACD (Move, Add, Change, and Delete) operations on physical printers, administration of the VPSX servers the printers are connected to, and administration of users and their privileges within the various GPWS-related tools.

The team's secondary applications are Key User, Pager, Badge Registration, IVT (Invoice Verification Tool), and the Print @ IBM timekeeping system.

The Key User application is used by select administrators (i.e., Key Users) in IBM's various geographic locations to administer responsibilities and privileges within the Print @ IBM departments. The “responsibility groups” it manages include team and department heads and members, as well as contact information for the teams involved.

The Pager tool administers the paging system for the Print @ IBM teams. When a Severity 1 (highest level) issue comes up after hours, it can send text messages and emails to the person(s) currently scheduled for that team or application. The online tool allows users to page team contacts, view and edit the pager schedule, and add or edit contacts and their information.

The Badge Registration tool is used by employees who have physical building access and printing privileges within IBM. It can be used to register for these privileges, or by an admin to remove access and privileges from an employee’s IBM account.

The IVT (Invoice Verification Tool) is an accounting system used to verify printing costs each month and quarter. Each geographic location that uses print services internally within IBM is required to submit its printing cost invoices to this system. User features include reviewing invoices, viewing reports for various locations and/or timeframes, and reviewing the status of past and current invoices.

As a member of the GPWS team, I led an effort to clean up the SQL databases containing printer records. When devices are moved or deleted, their old records remain in the tables with a status of “deleted”. These records are retained for recordkeeping between the various other IBM systems that connect to the database. Over time, however, the growing number of records caused significant processing times when generating reports for Print @ IBM management users, and the large number of “deleted” records was identified as a major cause. It was decided to remove devices that had been “deleted” before 2016 and whose records had not been edited since 2019.

For this effort, I wrote several SQL queries to produce one-time reports of the records that would be deleted. First, I wrote queries to list all devices matching the criteria above. Next, I wrote queries against the other tables related to the physical devices (primarily model and driver information) to determine whether, once the old device records were deleted, each related record would still be associated with any remaining device. If a record would no longer have any associated device, that model/driver/etc. could also be deleted. These queries were run in the development, test, and production environments to create .CSV backup files and a report of every record that would be deleted. I then wrote SQL scripts to delete all database entries included in the cleanup. All changes were made first in the development environment and were moved to the test and production environments only after testing confirmed that the cleanup was successful.
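The selection logic can be sketched in TypeScript over in-memory rows. This only mirrors the reasoning of the SQL queries described above; the row shape (`DeviceRow`, `deletedOn`, `modelId`, `driverId`) is hypothetical, since the actual schema is not described here. The key step is the orphan check: a model or driver row is deletable only if no device that survives the cleanup still references it.

```typescript
interface DeviceRow {
  id: number;
  status: "active" | "deleted";
  deletedOn?: Date; // when the device was marked deleted
  lastEdited: Date; // most recent edit to the record
  modelId: number;
  driverId: number;
}

// Cleanup criteria: deleted before 2016 and not edited since the start of 2019.
const DELETED_BEFORE = new Date("2016-01-01T00:00:00Z");
const EDITED_BEFORE = new Date("2019-01-01T00:00:00Z");

function isPurgeable(d: DeviceRow): boolean {
  return (
    d.status === "deleted" &&
    d.deletedOn !== undefined &&
    d.deletedOn < DELETED_BEFORE &&
    d.lastEdited < EDITED_BEFORE
  );
}

// Returns the devices to purge plus the model/driver IDs that would be left
// with no remaining device afterward (orphans that are safe to delete too).
function planCleanup(devices: DeviceRow[]) {
  const purge = devices.filter(isPurgeable);
  const remaining = devices.filter((d) => !isPurgeable(d));

  const stillUsedModels = new Set(remaining.map((d) => d.modelId));
  const stillUsedDrivers = new Set(remaining.map((d) => d.driverId));

  const orphanModels = Array.from(new Set(purge.map((d) => d.modelId))).filter(
    (m) => !stillUsedModels.has(m),
  );
  const orphanDrivers = Array.from(new Set(purge.map((d) => d.driverId))).filter(
    (drv) => !stillUsedDrivers.has(drv),
  );

  return { purgeIds: purge.map((d) => d.id), orphanModels, orphanDrivers };
}
```

Computing the orphans against the *remaining* devices, rather than against all devices, is what makes the related-table cleanup safe to run in the same pass.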

Another large project I undertook on the GPWS team was creating extensive documentation for installing and setting up the software and applications needed for the development environment. Previously, the only documentation consisted of piecemeal documents covering small portions of the setup process, which were largely incomplete even for the portions they covered. These documents also became partially obsolete when our applications migrated from traditional internal servers to external cloud servers. Anything not in the documentation either had to be relayed by longer-tenured members (which quickly turns into a messy game of telephone as information is passed down) or re-learned by new team members if no one remembered it or wrote it down.

To address this, I compiled all existing documentation into a single document, then brought the information up to date and filled in the many gaps in the process. Once this information was collected and fleshed out, I used the document to create a wiki. This setup guide gives current and future team members a single source for setup documentation, in an environment that is easy to update and extend as the applications and environments change over time.

For roughly a year and a half, I was involved in the IBM/Kyndryl split. IBM created Kyndryl by spinning off its GTS (Global Technology Services) business into a separate publicly traded company. Some of the functions migrated to Kyndryl had previously fallen under the Print @ IBM umbrella. During the split, I took part in planning sessions, logistics meetings, and work to ensure that services moving to Kyndryl brought along all the related services their teams needed to function independently of IBM, and that the split went as smoothly as possible. My role was minor, as GPWS was directly affected only through our maintenance of the Print @ IBM timekeeping system. During the migration period, timekeeping between IBM and Kyndryl was somewhat messy, and the GPWS team helped employees moving to Kyndryl continue to record their hours and show which hours were IBM’s or Kyndryl’s responsibility. In the overall effort, GPWS also advised leadership on which services were necessary for the main functions expected to migrate to Kyndryl.

During the second half of 2021, following the Kyndryl split, there was an initiative to refresh printer devices worldwide. This refresh included verifying devices at IBM sites globally. Through this verification, a large number of device records would be updated, and many devices would be removed from the current fleet. After verification, the remaining active devices would be moved from their various traditional servers worldwide to one of two new cloud-based IBM servers, each serving a distinct geographic area: the first serving EMEA (Europe, Middle East, and Africa), and the second serving the Americas and Asia Pacific countries. My team and I needed to make sure our applications and databases were up and running on these new servers before any devices could have their server assignments updated. Once that was done, the administrator-level users known as Key Users could update each device under their responsibility group to use the new servers.

These new servers required an update to the logic that drives server assignment when a print device is added or moved. The old logic relied on hard-coded values, which is a significant problem when trying to make an application more "future-proof". I was responsible for writing new database queries that assign these devices correctly without hard-coded values that would create more work whenever the server data changes. The new queries will continue to assign each device to the correct server even if the servers are updated again in the future.
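The difference between hard-coded and data-driven assignment can be sketched as follows. In this hypothetical TypeScript version, the mapping from a device's country to a region, and from a region to its active server, lives in data (in the real system, database tables queried at assignment time), so repointing a region to a new server is a data change rather than a code change. The `ServerRecord` and `RegionRecord` shapes, region codes, and server names are assumptions, not the actual GPWS schema.

```typescript
interface ServerRecord {
  serverId: string;
  region: string; // e.g. "EMEA" or "AMERICAS_AP" (hypothetical codes)
  active: boolean; // retired servers stay in the table but are skipped
}

interface RegionRecord {
  countryCode: string; // country of the device's site
  region: string;
}

// Look up the device's region from data, then pick the active server for that
// region. No server name or country appears in the logic itself.
function assignServer(
  countryCode: string,
  regions: RegionRecord[],
  servers: ServerRecord[],
): string | undefined {
  const region = regions.find((r) => r.countryCode === countryCode)?.region;
  if (region === undefined) return undefined; // unknown country: leave for manual review
  return servers.find((s) => s.region === region && s.active)?.serverId;
}
```

If a region's server is replaced later, marking the old row inactive and adding a new one is enough; `assignServer` itself does not change.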

The Print @ IBM department is fully remote, with team members spread throughout the US and worldwide, and most of our international teams located in India. As a member of the department, I had the opportunity to collaborate with developers, vendors, and other IBM employees worldwide.

- Java

- SQL

- DB2

- HTML

- CSS

- JavaScript

- WebSphere and other IBM-Specific technologies

- SQuirreL and other SQL Clients

- Source Control Techniques and Best Practices

- Legacy Code Updates

- Software Development and Design

- Cooperation within a global team separated by time zones and continents

iMedX Inc. (June 2020 - November 2020)

I was a member of the US development team hired to develop iMedX's Analytics platform. The company's core software is in medical transcription, and the Analytics platform is meant to give healthcare clients better insight into their billing and payment data. The existing analytics platform was written using the ASP.NET framework. Plans were in place to eventually replace it with a React frontend; however, that work was still in development when COVID-19 financial difficulties came to a head, the team was laid off, and the supervisor was reassigned. I was hired as an AI Software Engineer, with the goal of migrating to AWS tools and technologies to apply Machine Learning models to client data for better analytics predictions, specifically regarding patterns and predictions related to clients' billing contracts with insurance companies. To this end, I worked on a project to streamline our core databases and tables so they could be used in AWS "data lakes", which could later serve as a basis for those Machine Learning models.

HIPAA training was part of my onboarding for this position, as my role as a Software Engineer involved handling patients' Protected Health Information (PHI) in some cases. While the bulk of our development and testing datasets had been de-identified, more robust datasets existed that had not been, because de-identification would remove some of the information that certain features needed to be tested against. De-identified data was used primarily for development so that any accidental security issues introduced during development could not compromise PHI.

In this position, I had the opportunity to be a true "full-stack" engineer and worked on portions of every aspect of the Analytics platform. My tasks ranged from updating the schemas of the underlying SQL tables, to creating and modifying stored procedures on SQL servers, to creating API frameworks for different functions, to mid-level changes in the ASP.NET framework codebase, to direct changes to the frontend experience. I also worked with many tools while performing these tasks, such as SQL Server Profiler, Visual Studio's Schema Compare, a remote desktop environment on a VPN network, Atlassian's Jira, and the Postman API Development Platform.

One of my major projects at iMedX was an Alerts system that created alerts when various trigger conditions were met. The triggers were checked each time the dashboards displaying the related data were refreshed: the call to the Alert Trigger stored procedure was added to the SQL Server Agent job that rebuilt the financial dashboards. The stored procedure ran for each "active" database on both the Development and Production servers. Its parameters were the database name, the report type for which alerts were being created, and a list of the user roles for which alerts should be created. The stored procedure first collected the relevant financial columns from each of the dashboard Reporting tables. This data was aggregated into sums, and the trigger-relevant rates were then calculated from those sums. The string of user roles was split and used to compile a column of usernames that would receive any triggered alerts. A cursor then inserted an alert row into the Alerts table for each of those usernames for each triggered alert, and was deallocated afterward. The next time one of those users logged into the Analytics portal, they would see the new alerts created by this stored procedure.
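The stored procedure's steps above can be mirrored in a short TypeScript sketch, offered as an illustration rather than the original T-SQL. Assumptions: the column names `billed` and `paid`, the single "collection rate below 90%" trigger, the comma-separated role string, and the role-to-username map are all hypothetical, and plain loops stand in for the T-SQL cursor.

```typescript
interface ReportingRow {
  billed: number; // hypothetical financial columns
  paid: number;
}

interface AlertRow {
  username: string;
  reportType: string;
  message: string;
  isRead: boolean;
  isActive: boolean;
}

// Hypothetical trigger: alert when the collection rate drops below 90%.
const COLLECTION_RATE_THRESHOLD = 0.9;

function buildAlerts(
  rows: ReportingRow[],
  reportType: string,
  rolesCsv: string, // e.g. "Billing, Manager"
  usersByRole: Map<string, string[]>, // role -> usernames
): AlertRow[] {
  // 1. Aggregate the financial columns into sums.
  const billed = rows.reduce((sum, r) => sum + r.billed, 0);
  const paid = rows.reduce((sum, r) => sum + r.paid, 0);

  // 2. Calculate the trigger-relevant rate from the sums.
  const triggered: string[] = [];
  if (billed > 0 && paid / billed < COLLECTION_RATE_THRESHOLD) {
    triggered.push(`Collection rate ${((100 * paid) / billed).toFixed(1)}% is below target`);
  }

  // 3. Split the role string and collect the distinct recipient usernames.
  const recipients = new Set<string>();
  for (const role of rolesCsv.split(",").map((s) => s.trim()).filter(Boolean)) {
    for (const user of usersByRole.get(role) ?? []) recipients.add(user);
  }

  // 4. One alert row per recipient per triggered alert (the cursor's job in T-SQL).
  const alerts: AlertRow[] = [];
  for (const message of triggered) {
    for (const username of Array.from(recipients)) {
      alerts.push({ username, reportType, message, isRead: false, isActive: true });
    }
  }
  return alerts;
}
```

In T-SQL, step 4 could likely also be written as a single set-based `INSERT ... SELECT` over the joined recipients and triggered alerts; the loop here simply follows the cursor-based description.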

For the Alerts system project, I created Entity Framework mappings and API calls so that the frontend application could interact with the underlying Alerts table on the SQL Server. This allowed the frontend to pull the alerts for the logged-in user, display and update their read/unread status, and mark alerts "inactive". Marking an alert inactive was a soft delete: the record stayed in the underlying table for recordkeeping but was no longer displayed to the user.
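A soft delete of this kind can be sketched as below. The `StoredAlert` shape and the function names are hypothetical TypeScript stand-ins for the Entity Framework entity and API endpoints; the point is that "deleting" only flips a flag, and every read meant for display filters on that flag.

```typescript
interface StoredAlert {
  id: number;
  username: string;
  message: string;
  isRead: boolean;
  isActive: boolean; // false = soft-deleted: kept for records, hidden from users
}

// What the portal shows: the user's alerts that have not been soft-deleted.
function alertsForUser(all: StoredAlert[], username: string): StoredAlert[] {
  return all.filter((a) => a.username === username && a.isActive);
}

// Toggle the read/unread status of one alert.
function setRead(all: StoredAlert[], id: number, isRead: boolean): void {
  const found = all.find((a) => a.id === id);
  if (found) found.isRead = isRead;
}

// "Delete" from the user's point of view; the row itself is never removed.
function markInactive(all: StoredAlert[], id: number): void {
  const found = all.find((a) => a.id === id);
  if (found) found.isActive = false;
}
```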

In this position, being self-motivated was a major advantage for my productivity, as the bulk of my work was self-paced and unsupervised in a mostly remote role. The local development team met in person at the local datacenter once a week for a few hours to work together on tasks better suited to collaboration and pair programming than to independent work.

- ASP.NET Framework

- C#

- Visual Basic

- SQL/T-SQL

- SQL Server/SQL Server Management Studio

- HTML

- CSS

- JavaScript

- jQuery

- Source Control Techniques and Best Practices

- Legacy Code Updates

- Software Development and Design

- SQL Stored Procedure, Table, and General Schema Design and Updates

- Cooperation within a global team separated by time zones and continents

Centennial Arts (June 2019 - September 2019)

I developed software elements for use on customer websites, where I was responsible for creating new elements, bringing older elements up to current standards, and improving and documenting standards for use in the company's proprietary software. This software was similar to Adobe Dreamweaver; the company's founder wanted editor features that Dreamweaver did not provide, so he wrote his own. The software ran in a web portal on the company's internal systems.

I was also responsible for meeting with the marketing and ad campaign staff to ensure that my website work met all client needs and expectations, stayed consistent with ad campaigns, and was SEO-friendly.

These elements used HTML, CSS, JavaScript, and some PHP. The previous developer had hardcoded values such as font family, text size, and text color into many elements, rather than leaving them templated and letting the theme decide them. I created new templated elements, removing these hardcoded values and updating the HTML and CSS to current HTML5 and CSS3 standards. Within these older "bad" elements, CSS was often written inline in the HTML rather than separated into its own file. This caused problems when someone reused one of these elements, because inline styles override the rules in the site's CSS files (unless those rules are marked !important).

Another common problem was the previous developer's use of jQuery. Rather than referencing the server's jQuery library, or even a hosted jQuery URL, the developer would fill a JavaScript file with a "screenshot" of jQuery. This hack would run jQuery for that one element, but if you tried to reuse that "screenshot" in a new copy when updating the element, it no longer worked. The practice was extremely unusual, and once it was discovered, part of my responsibilities became tracking down elements that used it, since it falls far outside industry standards and could introduce many issues. Ensuring it was removed from production on all customer websites was one of my top priorities.

Along with creating elements, I created CSS "themes" that handled the overall look of a site, including fonts, font sizes for different types of text, and link text decoration. Once a theme was created, it applied on top of a website built from templated elements. These elements were created as templates so they could be reused on any customer website rather than re-created every time they were needed. A website's theme was mostly decided by the client, with input from the web development team to keep the client satisfied while maintaining design standards and practices.

The editor enforced certain HTML, CSS, and JavaScript standards. One of its main functions was restricting which CSS rules could be used, as there are countless hacks for manipulating formatting that do not follow current (or any) standards. As I worked, I came across rules that had been added to the standards but not yet to the editor's enforcement. As these came up, I was responsible for documenting the new standards in the internal system and then adding the rule to the editor's backend enforcement.
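The editor's CSS restriction can be sketched as an allowlist check. This is a hypothetical TypeScript illustration, not the editor's backend: the `ALLOWED_PROPERTIES` set is a small invented sample, and the naive declaration parsing ignores comments, at-rules, and values that contain semicolons. In this model, enforcing a newly documented standard amounts to adding its property to the allowlist.

```typescript
// Sample allowlist; the real editor's rule set is not described here.
const ALLOWED_PROPERTIES = new Set([
  "color",
  "background-color",
  "font-family",
  "font-size",
  "margin",
  "padding",
  "text-decoration",
]);

interface Violation {
  property: string;
  declaration: string;
}

// Check the declarations inside one rule body, e.g. "color: red; *zoom: 1".
// Property hacks such as "*zoom" or "_height" fail because they are not listed.
function checkDeclarations(body: string): Violation[] {
  const violations: Violation[] = [];
  for (const raw of body.split(";")) {
    const declaration = raw.trim();
    if (!declaration) continue; // skip empty pieces such as a trailing ";"
    const colon = declaration.indexOf(":");
    const name = colon === -1 ? declaration : declaration.slice(0, colon);
    const property = name.trim().toLowerCase(); // CSS property names are case-insensitive
    if (!ALLOWED_PROPERTIES.has(property)) {
      violations.push({ property, declaration });
    }
  }
  return violations;
}
```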

- HTML

- CSS

- JavaScript

- jQuery

- PHP

- Client Meetings and Negotiations

- SEO Techniques and Best Practices

- Legacy Code Updates

- Web Development and Design

This Website!

This website was hand-written using HTML5, CSS3, PHP, and JavaScript. I own this domain and use Hostinger as my hosting platform. The site is responsive, cross-browser compatible, and mobile-friendly. Using XAMPP, which allows running an Apache web server on localhost, I tested for compatibility across Google Chrome, Firefox, Safari, Microsoft Edge, and Internet Explorer.

Hand-coding this site allowed me to control elements and their behavior exactly as I wanted, rather than working within the limited options of a platform such as Wix or WordPress. The one exception to the site being written entirely by me is the image gallery element on this "Projects" page, which uses the FlexSlider 2 jQuery plugin by WooCommerce.

The source code for this website can be found in the GitHub repository linked in the "More Examples" tab below this section. The repo includes a webhook that triggers Hostinger to redeploy the site on each push to the main branch, so any update is available on the live site virtually instantly; deployment takes only a fraction of a second because no real compilation is needed. Keeping the source on GitHub also gives me a change history. While a change history may not be the most useful tool for a personal project like this, it is a good habit to maintain, so that the GitHub workflow is second nature on workplace projects.

I wrote a small JavaScript function that strips CSS formatting from text copied from these pages. While copying text back and forth to revise the wording of various sections, I found that the site's background color came along with the copied text. The script runs on the copy event and replaces the clipboard contents with plain text. Line breaks and tabs are preserved; only the CSS styling (in this case text color, background color, font size, and font family) is removed, which makes copying and pasting across platforms much smoother.

Each icon on the site is an edited version of Creative Commons-licensed clip art or icons. I edited them in GIMP, a free, open-source alternative to Adobe Photoshop. Generally, I selected a line-art icon, placed it over the mint green circle, and then either colored the line art or made it transparent, depending on the icon's purpose.

Additionally, I recreated an earlier version of this website as an ASP.NET web application. Because my hosting service, Hostinger, is Linux-based, it does not support hosting this Windows-based framework application. You can, however, find the Azure repository containing this project under the "More Examples" dropdown in this section.

- HTML

- CSS

- JavaScript

- PHP

- jQuery

- GIMP (Photoshop equivalent)

- Web Design and Development

- Web Hosting

- SSL Encryption and Certification

- Domain Ownership

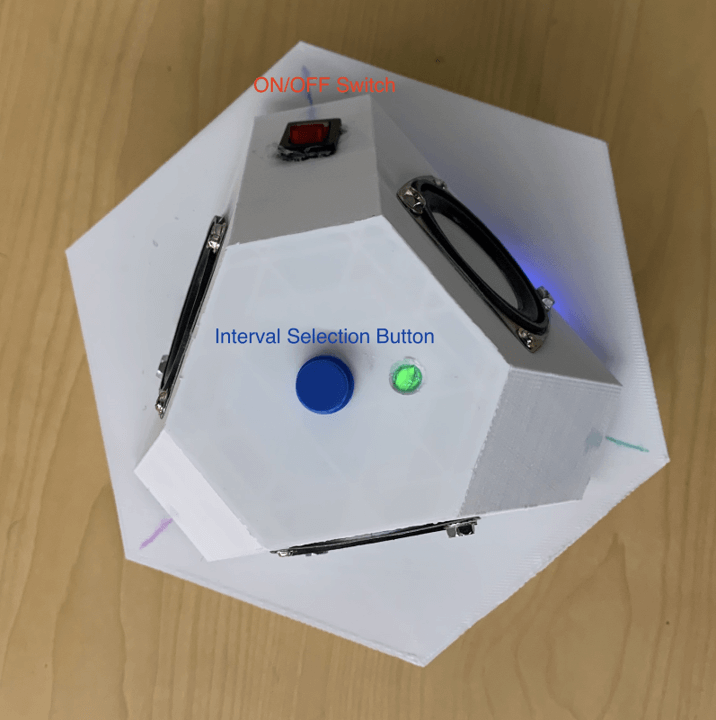

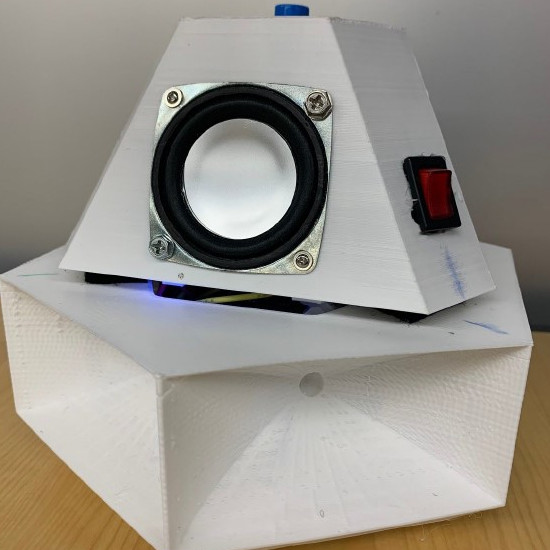

Rubble Listening Device

Course: Computer Systems Design

The professor who taught this course is part of a U.S. disaster relief team that is called in to respond to natural disasters across the country, handling the technology side of relief: for example, piloting drones to survey areas that are unsafe for people to enter, setting up networks that relief workers can use to communicate in the aftermath, and implementing new technologies that improve disaster response.

The class was presented with a list of projects that she and her team had identified as needs during these disaster responses. Students then "bid" on projects that interested them or that they could best contribute to, outlining what they could bring to the team. My team was tasked with creating a device to "hear" survivors trapped under rubble. Survivors rescued from beneath rubble commonly report that they were yelling or banging on something, but nobody on the surface could hear them.

The project requirements were that the device must: be self-contained and self-reliant (many disaster sites have limited or no wireless network to rely on); be operable by someone wearing "bunker gear" or similar movement-restricting gear; record for 5 minutes, then wait a user-selected interval before the next recording; and last 12 hours on a single charge.
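To see what the timing requirements imply, the sketch below counts how many complete 5-minute recordings fit into a 12-hour charge for each interval the device offered (5, 10, 15, 30, or 60 minutes). It is a simplified model that assumes the device does nothing but record and wait; the pre-recorded prompt and any processing time are ignored, so real counts would be somewhat lower.

```python
RECORD_MINUTES = 5                 # length of each recording
CHARGE_MINUTES = 12 * 60           # required battery life: 12 hours
INTERVALS = (5, 10, 15, 30, 60)    # user-selectable waits between recordings


def recordings_per_charge(interval_minutes: int,
                          record_minutes: int = RECORD_MINUTES,
                          charge_minutes: int = CHARGE_MINUTES) -> int:
    """Count complete recordings that finish within one charge.

    The first recording costs record_minutes; each later one costs a
    full cycle (one wait plus one recording).
    """
    if charge_minutes < record_minutes:
        return 0
    cycle = interval_minutes + record_minutes
    return 1 + (charge_minutes - record_minutes) // cycle


if __name__ == "__main__":
    for interval in INTERVALS:
        n = recordings_per_charge(interval)
        print(f"{interval:>2}-minute wait: {n} recordings, "
              f"{n * RECORD_MINUTES} minutes of audio")
```

Under this model, a 5-minute wait yields 72 recordings (360 minutes of audio) per charge, while a 60-minute wait yields 12, so longer intervals trade listening coverage for fewer files on the SD card.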

Our team used the ReSpeaker Core v2, which is very similar to the board used in the Amazon Echo. The board's microphones provide a small amount of built-in noise cancellation, which we built on with further noise reduction of our own. The board's array of six microphones let us capture a "full picture" of the surrounding area's noise. We used a variation on an existing digital signal processing (DSP) algorithm, adding a Fourier transform step to further reduce noise in the recordings. We used Python for the entire project, as it is natively supported on the ReSpeaker and is relatively lightweight in terms of memory. On such a small device memory is already limited, and we also wanted to reserve as much storage as possible for recording files.
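The write-up does not name the DSP algorithm the team adapted. Purely as an illustration of Fourier-transform-based noise reduction, the sketch below shows spectral subtraction, one common technique of this kind: estimate the noise's magnitude spectrum from a noise-only frame, subtract it from a frame's magnitude spectrum bin by bin, and resynthesize using the original phase. It uses a naive O(n²) DFT from the standard library so it stays self-contained; on real hardware an FFT library and overlapping, windowed frames would be used instead.

```python
import cmath
import math


def dft(samples):
    """Naive discrete Fourier transform (O(n^2)), for illustration only."""
    n = len(samples)
    return [sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Inverse of dft(); returns the real part of each reconstructed sample."""
    n = len(spectrum)
    return [(sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]


def spectral_subtract(frame, noise_frame, floor=0.0):
    """Remove an estimated noise spectrum from one frame of audio.

    frame, noise_frame: equal-length lists of samples; noise_frame should
    contain background noise only.
    floor: minimum magnitude kept in each frequency bin.
    """
    if len(frame) != len(noise_frame):
        raise ValueError("frame and noise_frame must be the same length")
    signal_spec = dft(frame)
    noise_mag = [abs(c) for c in dft(noise_frame)]
    cleaned = []
    for c, nm in zip(signal_spec, noise_mag):
        mag = max(abs(c) - nm, floor)                     # subtract noise magnitude, clamp at floor
        cleaned.append(cmath.rect(mag, cmath.phase(c)))   # keep the original phase
    return idft(cleaned)
```

Because a real-valued signal's spectrum is conjugate-symmetric and the subtraction treats mirrored bins identically, the cleaned spectrum stays symmetric and the output is real.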

For external controls, we used a single input button and a power toggle switch, plus an LED on top of the device to indicate power status. Once the device was powered on, the LED lit up green and the device prompted the user to select the interval between recordings using the button. Pressing the button repeatedly cycled through intervals of 5, 10, 15, 30, or 60 minutes, with the device announcing each selected interval aloud. Holding the button down confirmed the selection.
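The one-button interface is essentially a small state machine: a short press advances to the next interval (wrapping around after the last), and a long hold confirms. The sketch below models that logic with the hardware abstracted away. The class, its method names, and the `announce` callback (standing in for playing a voice prompt) are hypothetical, not the team's code, and telling a short press from a long hold would depend on the board's GPIO handling.

```python
from typing import Callable, Optional, Sequence

INTERVALS_MINUTES = (5, 10, 15, 30, 60)


class IntervalSelector:
    """Hypothetical model of the one-button interval menu."""

    def __init__(self, options: Sequence[int] = INTERVALS_MINUTES,
                 announce: Callable[[str], None] = print):
        self.options = tuple(options)
        self.announce = announce          # stands in for a spoken prompt
        self.index = 0                    # start on the first option
        self.confirmed: Optional[int] = None

    @property
    def current(self) -> int:
        return self.options[self.index]

    def short_press(self) -> None:
        """Advance to the next interval, wrapping around, and announce it."""
        if self.confirmed is not None:
            return                        # selection is locked once confirmed
        self.index = (self.index + 1) % len(self.options)
        self.announce(f"{self.current} minutes")

    def long_hold(self) -> int:
        """Confirm the current interval and return it."""
        if self.confirmed is None:
            self.confirmed = self.current
            self.announce(f"{self.confirmed} minutes confirmed")
        return self.confirmed
```

For example, with `announce=spoken.append`, two short presses followed by a long hold select 15 minutes and leave `["10 minutes", "15 minutes", "15 minutes confirmed"]` in `spoken`.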

Once the interval was selected, the device played a pre-recorded message informing anyone within hearing range that the device was on, that it would record for the next five minutes to hear and locate them, and that they should shout or bang on metal once the message ended. The device then began its cycle of recording and waiting, continuing until the toggle switch was flipped, the SD card was full, or the battery ran out. The pre-recorded message played before each recording. Powering off the device while it was recording or processing audio lost that stage of the recording, but did not leave a process lock active on the device's next power-up.
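The record-and-wait cycle and its stop conditions can be sketched as a loop. In this hypothetical sketch the hardware checks and actions (`switch_on`, `sd_has_space`, `battery_ok`, `play_prompt`, `record`, `sleep`) are passed in as callables, so the control flow can be exercised without a device; none of these names come from the project itself.

```python
from typing import Callable


def run_cycle(interval_minutes: int,
              switch_on: Callable[[], bool],
              sd_has_space: Callable[[], bool],
              battery_ok: Callable[[], bool],
              play_prompt: Callable[[], None],
              record: Callable[[int], None],
              sleep: Callable[[float], None],
              record_minutes: int = 5) -> int:
    """Repeat prompt -> record -> wait until a stop condition is hit.

    Returns the number of recordings completed. This models control flow
    only; every hardware action is a hypothetical injected callable.
    """
    completed = 0

    def can_continue() -> bool:
        # Stop if the switch is flipped off, the SD card is full, or the battery is low.
        return switch_on() and sd_has_space() and battery_ok()

    while can_continue():
        play_prompt()                  # ask anyone nearby to shout or bang on metal
        record(record_minutes)
        completed += 1
        if not can_continue():
            break
        sleep(interval_minutes * 60)   # wait the user-selected interval, in seconds
    return completed
```

A real device would also need to watch the toggle switch during the wait and the recording, not only between steps.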

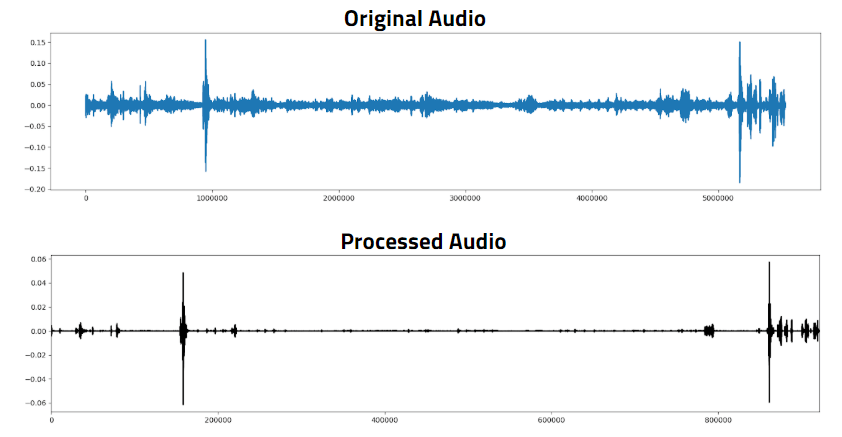

After the device was retrieved from the site, the SD card containing the recordings could be removed and inserted into a compatible device. A person would then need to find the "spikes" in the audio files, listen to each one, and determine whether it came from a survivor or was just an anomaly in the overall noise of the disaster site.

Future work on this project would add more automation for processing the final sound files. A separate script could cut out and timestamp these spikes, compiling them into a single, easier-to-review file. This would reduce the load on human volunteers and workers, who would only need to listen to that one file instead of searching much larger files for spikes that may or may not indicate a survivor.
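The proposed spike-finding script could start from simple windowed energy detection. The hypothetical sketch below splits a list of samples into fixed-length windows, computes each window's RMS level, flags windows louder than a chosen multiple of the recording's median level, and merges adjacent flagged windows into (start, end) timestamps in seconds. The window length and threshold factor are illustrative assumptions that would need tuning on real disaster-site recordings, and reading the audio files themselves is left out.

```python
import math
from statistics import median
from typing import List, Optional, Sequence, Tuple


def find_spikes(samples: Sequence[float], sample_rate: int,
                window_seconds: float = 0.5,
                threshold: float = 4.0) -> List[Tuple[float, float]]:
    """Return (start_s, end_s) spans whose RMS exceeds threshold x median RMS."""
    window = max(1, int(window_seconds * sample_rate))
    levels = []
    for start in range(0, len(samples), window):
        chunk = samples[start:start + window]
        levels.append(math.sqrt(sum(s * s for s in chunk) / len(chunk)))
    if not levels:
        return []

    baseline = median(levels)          # typical background level of this recording
    spans: List[Tuple[float, float]] = []
    prev: Optional[int] = None         # index of the last flagged window
    for i, level in enumerate(levels):
        if level > threshold * baseline:
            start_s = i * window / sample_rate
            end_s = min((i + 1) * window, len(samples)) / sample_rate
            if prev == i - 1:
                spans[-1] = (spans[-1][0], end_s)   # extend the previous span
            else:
                spans.append((start_s, end_s))
            prev = i
    return spans
```

The returned timestamps could then drive the cutting step, copying only those spans into the compiled review file.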

- Python

- Analog to Digital Conversion and Processing

- Use & Manipulation of Python libraries

- Hardware Input & Interactions

- Software Algorithms

- Project Bid Process

- Real-World Problem Experience

- Client Acceptance Testing

- Client Presentation

- Project Time Budgeting (Gantt charts, recordkeeping)

- Technical Documentation (proposals, technical update reports, non-technical user's manual, technical programmer's manual, and final technical report)

SaaS Sailing Inventory System

Course: Software Engineering

For this course, teams were challenged to find a client, such as a non-profit or other organization, in the Bryan/College Station area. My team identified the Texas A&M Sailing Team as a potential client. Until then, the Sailing Team had relied on a paper inventory system. The previous summer, the team's storage shed at Lake Bryan had been broken into, prompting a full inventory of the available equipment. Along with the larger items that had been stolen or broken, the team found that many small items checked out to individuals were missing (items such as oars and life jackets, which were less likely to have been stolen than the more expensive equipment). Because of these discrepancies, the team wanted to move to a new system that would make it easier than the paper system to track inventory and who had checked out each item.

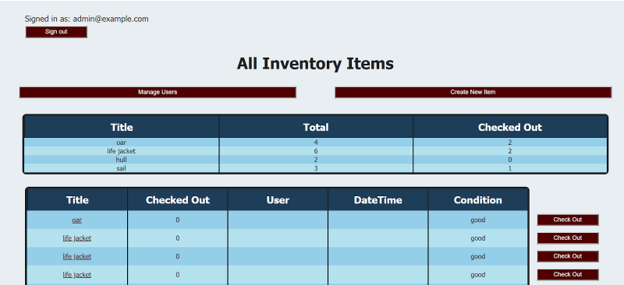

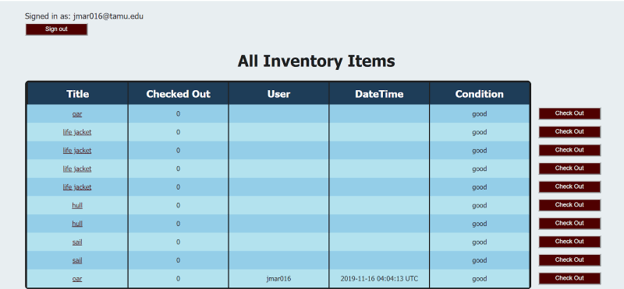

The backend of this project was built in Ruby on Rails and consisted of the inventory database itself and the Admin vs. Member access rules. The frontend was a web portal written in HTML, CSS, JavaScript, and PHP. The project was deployed to Heroku using Heroku's Git-linked, terminal-based deployment toolkit.

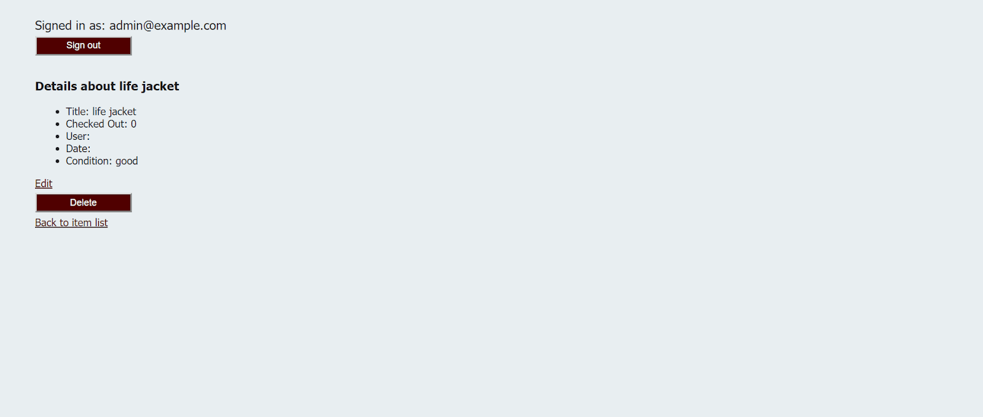

Our key features were: tracking inventory items and their status; adding, removing, and editing items in the database; sorting the table in the web portal; separate Admin and Member views (so that Sailing Team sponsors and officers had more control over the inventory system than regular members); reporting broken or missing items; and an email notification system for Admins. Our biggest struggle was the email notification system. We used an email API for this purpose, but during testing, our automated test cases caused the API provider to put a hold on our account for "suspicious activity". We resolved this after contacting the API's development team.
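The project itself was built in Ruby on Rails; to keep one language across these examples, the sketch below uses Python to model the core inventory features in memory: items with a status, checking items out to a member, reporting items as broken or missing, sorting for display, and queuing an Admin notification. All names are hypothetical. Notifications are appended to a list rather than sent through an email API, which is also one way to keep automated tests from contacting a live service.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional


class Status(Enum):
    AVAILABLE = "available"
    CHECKED_OUT = "checked out"
    BROKEN = "broken"
    MISSING = "missing"


@dataclass
class Item:
    item_id: int
    name: str
    status: Status = Status.AVAILABLE
    holder: Optional[str] = None       # member who has the item checked out


@dataclass
class Inventory:
    items: Dict[int, Item] = field(default_factory=dict)
    admin_notifications: List[str] = field(default_factory=list)  # stand-in for email

    def add(self, item: Item) -> None:
        self.items[item.item_id] = item

    def remove(self, item_id: int) -> None:
        del self.items[item_id]

    def rename(self, item_id: int, new_name: str) -> None:
        self.items[item_id].name = new_name

    def check_out(self, item_id: int, member: str) -> None:
        item = self.items[item_id]
        if item.status is not Status.AVAILABLE:
            raise ValueError(f"{item.name} is {item.status.value}")
        item.status, item.holder = Status.CHECKED_OUT, member

    def check_in(self, item_id: int) -> None:
        item = self.items[item_id]
        item.status, item.holder = Status.AVAILABLE, None

    def report(self, item_id: int, status: Status, reporter: str) -> None:
        if status not in (Status.BROKEN, Status.MISSING):
            raise ValueError("only broken or missing items can be reported")
        item = self.items[item_id]
        item.status = status           # holder is kept so Admins know who had it
        self.admin_notifications.append(
            f"{reporter} reported {item.name} (#{item.item_id}) as {status.value}")

    def sorted_by(self, key: str) -> List[Item]:
        """Sort for display by "name", "item_id", or "status"."""
        if key == "status":
            return sorted(self.items.values(), key=lambda i: i.status.value)
        return sorted(self.items.values(), key=lambda i: getattr(i, key))
```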

We restricted signup so that new users could only register as Members. Admin users have access to user management and can promote and demote users between Admin and Member status. This introduces a potential security issue: there is no safeguard against a "troll" Admin account demoting every other Admin. We stressed this flaw to our client, making clear that new Admins should be added with extreme caution, with the Admin "team" verifying the account's information with the person being promoted. Future work on this project would focus first on patching this security hole.
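One possible way to close this hole, offered as a hypothetical sketch rather than what the team built, is a two-person rule: demoting an Admin only takes effect once a second Admin, other than the requester and the target, approves it. Signup still always creates a Member. The class and method names are illustrative, and the sketch is in Python rather than the project's Ruby on Rails.

```python
from typing import Dict

ADMIN, MEMBER = "admin", "member"


class UserRoles:
    """Hypothetical role store: self-signup creates Members only, and
    demoting an Admin needs a second, independent Admin's approval."""

    def __init__(self) -> None:
        self.roles: Dict[str, str] = {}
        self.pending: Dict[str, str] = {}  # target -> Admin who requested the demotion

    def sign_up(self, user: str) -> None:
        if user in self.roles:
            raise ValueError(f"{user} already exists")
        self.roles[user] = MEMBER          # signup can never create an Admin

    def _require_admin(self, user: str) -> None:
        if self.roles.get(user) != ADMIN:
            raise PermissionError(f"{user} is not an Admin")

    def promote(self, actor: str, target: str) -> None:
        self._require_admin(actor)
        if target not in self.roles:
            raise ValueError(f"unknown user {target}")
        self.roles[target] = ADMIN

    def request_demotion(self, actor: str, target: str) -> None:
        self._require_admin(actor)
        if self.roles.get(target) != ADMIN:
            raise ValueError(f"{target} is not an Admin")
        self.pending[target] = actor       # recorded, but not applied yet

    def approve_demotion(self, approver: str, target: str) -> None:
        self._require_admin(approver)
        requester = self.pending.get(target)
        if requester is None:
            raise ValueError(f"no pending demotion for {target}")
        if approver in (requester, target):
            raise PermissionError("a different Admin must approve this demotion")
        # The approver is an Admin distinct from the target, so at least one
        # Admin always remains after the demotion.
        del self.pending[target]
        self.roles[target] = MEMBER
```

On its own, a rogue Admin can only file requests; each demotion still needs another Admin to sign off. This would not stop two colluding Admins, so vetting promotions carefully, as the team advised the client, would still matter.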

The team used GitHub for source control. As team members developed features, each of us created a fork of the main project for each feature. Development and testing were done on virtual machines, with team members using a mix of Cloud9, AWS, and Windows Hyper-V virtual machines running Ubuntu. Automated tests ran on each commit and merge to catch any bugs a new addition introduced into previously working code. We followed Agile and Scrum methodologies, working in sprints and deploying new features as soon as they had been tested. We used RSpec and Cucumber to run BDD tests, and also ran functionality tests by interacting with the software in the development and production environments. RSpec is a BDD testing tool for Ruby and Ruby on Rails. Cucumber lets test cases be written in plain English, which made it very helpful for checking whether the features we implemented met their stated requirements.

- Ruby on Rails

- SQL

- HTML

- CSS

- JavaScript

- Software as a Service (SaaS)

- RSpec

- Cucumber

- Git/GitHub

- Heroku

- Agile Methodology

- Scrum Teams

- Iterative Development

- Behavior-Driven Development (BDD) Testing